Okay, let’s talk about xAI. You’ve probably heard the buzz – another AI company, right? But here’s the thing: this one’s different, and understanding xAI’s mission is crucial. I initially thought it was just another tech startup jumping on the AI bandwagon. But then I dug deeper, and what I found fascinated me. It’s not just about building smarter algorithms; it’s about fundamentally changing how we interact with AI.

Why xAI’s Approach to AI Matters

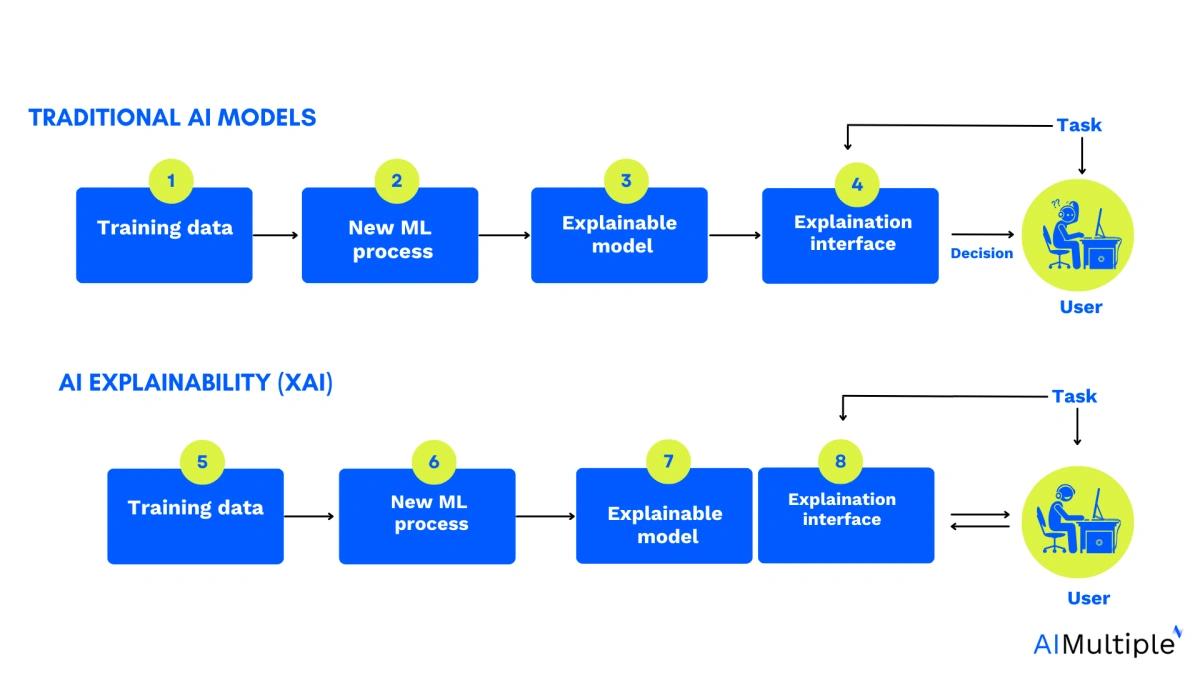

The big question is: why should anyone care? Well, the current AI landscape is dominated by “black box” systems. These systems work, often incredibly well, but understanding why they make certain decisions is a mystery. It’s like having a super-smart assistant who can solve any problem but can’t explain their reasoning. Not ideal, right? xAI, on the other hand, is striving for explainable AI. They aim to build AI systems that are not only intelligent but also transparent and understandable. This isn’t just a nice-to-have feature; it’s crucial for building trust and ensuring responsible AI development.

Here’s the thing: If AI is going to truly transform our lives – from healthcare to finance to self-driving cars – we need to be able to understand how it works. Imagine a doctor relying on an AI to diagnose a patient, but the AI can’t explain its reasoning. Would you trust that diagnosis? Probably not. xAI’s focus on interpretability is essential for AI safety and ethical considerations.

The Team Behind xAI and Their Vision

So, who’s behind this ambitious project? xAI was founded by Elon Musk, and that alone grabs headlines. But it’s the team he’s assembled that’s truly impressive. They’ve pulled together some of the brightest minds in AI research from organizations like DeepMind, OpenAI, Google, Microsoft, and the University of Toronto. According to their announcement, their goal is to “build AI systems that are helpful to humanity.” It sounds lofty, but when you look at the expertise involved, you realize it’s not just empty rhetoric. The team members’ diverse backgrounds and experience bring a unique perspective to tackling complex AI challenges.

The team’s stated mission goes beyond simply creating advanced AI; it aims to develop AI that aligns with human values and goals. This is a critical point. We’re not just talking about building smarter machines; we’re talking about building machines that are beneficial and safe for society. This is a long-term vision, but it’s one that’s essential for ensuring a positive future with AI. This is especially important when considering the potential impact of artificial general intelligence (AGI).

How xAI Plans to Achieve Explainability

Okay, so how exactly does xAI plan to make AI more understandable? Well, they’re focusing on several key areas, including developing new techniques for visualizing and interpreting AI decision-making processes. They’re also exploring ways to build AI models that are inherently more transparent, rather than trying to retrofit explainability onto existing black-box systems. One approach involves creating AI models that provide justifications for their decisions. These justifications could be in the form of natural language explanations or visual representations. The idea is to make it easier for humans to understand the reasoning behind the AI’s actions.
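To make the idea of an “inherently transparent” model a bit more concrete, here’s a minimal Python sketch. To be clear, this is not xAI’s method (nothing public describes one at this level of detail); it’s just a toy linear scoring model that justifies its decision in plain language. The feature names, weights, and threshold are all made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical weights for a toy loan-approval score; not from any real system.
WEIGHTS = {"income_k": 0.04, "years_employed": 0.3, "missed_payments": -1.2}
BIAS = -2.0
THRESHOLD = 0.0


@dataclass
class Decision:
    approved: bool
    score: float
    contributions: dict

    def explain(self) -> str:
        # Rank features by how strongly they pushed the score in either direction.
        ranked = sorted(self.contributions.items(),
                        key=lambda kv: abs(kv[1]), reverse=True)
        parts = [f"{name} contributed {value:+.2f}" for name, value in ranked]
        verdict = "approved" if self.approved else "declined"
        return f"Application {verdict} (score {self.score:+.2f}): " + "; ".join(parts)


def decide(features: dict) -> Decision:
    # Each contribution is weight * value, so the score decomposes exactly
    # into per-feature terms plus the bias.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    return Decision(approved=score >= THRESHOLD, score=score,
                    contributions=contributions)


if __name__ == "__main__":
    decision = decide({"income_k": 60, "years_employed": 2, "missed_payments": 1})
    print(decision.explain())
```

Because the score is just a sum of per-feature terms, the explanation isn’t bolted on afterwards; it is the model. That’s the trade-off with this kind of design: you get transparency for free, but a simple additive model may not match the accuracy of a black-box one.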

For example, consider an AI system designed to detect fraud. Instead of simply flagging a transaction as suspicious, the AI could provide a detailed explanation of why it believes the transaction is fraudulent. This could include highlighting specific features of the transaction that are unusual or inconsistent with the customer’s past behavior. This approach not only helps humans understand the AI’s reasoning, but also allows them to validate the AI’s decisions and identify potential errors.
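Here’s roughly what that could look like in code. This is a simplified sketch, assuming each transaction is a dictionary of numeric features and that “unusual” means far from the customer’s own historical average (measured in standard deviations). The function name, the feature names, and the threshold of 3.0 are my own illustrative choices, not anything a real fraud system is known to use.

```python
from statistics import mean, stdev


def explain_transaction(history, transaction, z_threshold=3.0):
    """Compare a transaction to a customer's past transactions.

    `history` is a list of dicts of numeric features (at least two entries);
    `transaction` is one dict with the same keys.
    Returns (is_suspicious, reasons), where reasons is a list of strings.
    """
    reasons = []
    for feature, value in transaction.items():
        past = [record[feature] for record in history]
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            # The customer's history never varies, so any change stands out.
            if value != mu:
                reasons.append(
                    f"{feature}={value} differs from the customer's usual value of {mu}"
                )
            continue
        z = (value - mu) / sigma
        if abs(z) >= z_threshold:
            reasons.append(
                f"{feature}={value} is {abs(z):.1f} standard deviations "
                f"from the customer's average of {mu:.2f}"
            )
    # The transaction is flagged only if at least one feature is unusual.
    return bool(reasons), reasons


if __name__ == "__main__":
    past_transactions = [
        {"amount": 20, "hour": 12},
        {"amount": 25, "hour": 13},
        {"amount": 22, "hour": 14},
        {"amount": 30, "hour": 12},
        {"amount": 18, "hour": 13},
    ]
    flagged, why = explain_transaction(past_transactions, {"amount": 900, "hour": 13})
    print(flagged)
    for reason in why:
        print("-", reason)
```

The point isn’t the statistics, which are deliberately basic. It’s that the output is a list of reasons a human reviewer can check against the account history, rather than a bare “suspicious” flag.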

The Potential Impact on Various Industries

The implications of xAI’s work are far-reaching. Explainable AI could revolutionize industries like healthcare, finance, and law, where transparency and accountability are paramount. In healthcare, it could lead to more accurate diagnoses and more personalized treatment plans. In finance, it could help prevent fraud and ensure fair lending practices. In law, it could help uncover biases in algorithms and ensure that justice is served fairly. A common mistake I see people make is underestimating the broad reach that AI will have across various business sectors, but the xAI team seems to understand that impact. In this era of growing AI development, having clear ethical considerations will be invaluable.

Furthermore, xAI’s research could also have a significant impact on the development of autonomous systems, such as self-driving cars and drones. If we can understand how these systems make decisions, we can better ensure their safety and reliability. This is particularly important in situations where these systems are making life-or-death decisions. Imagine a self-driving car that suddenly swerves to avoid an obstacle. If we can understand why the car made that decision, we can better assess whether it was the right decision and identify potential areas for improvement. And as more jobs come to involve working alongside AI, that will require a deep level of trust between people and machines.

Criticisms and Challenges Facing xAI

Of course, xAI’s vision is not without its challenges. Building explainable AI is a complex and technically difficult task. There are also concerns about the potential for misuse. For example, if we can understand how an AI system works, it may be easier to manipulate or exploit it. And let’s be honest, some critics question whether Elon Musk’s involvement might distract from the core scientific goals. I initially thought this was straightforward, but then I realized that true AI safety requires ongoing effort and vigilance.

Despite these challenges, xAI’s commitment to building transparent and understandable AI is a crucial step towards ensuring a positive future for AI. Their work has the potential to transform industries, improve decision-making, and build trust between humans and machines. It’s not just about building smarter AI; it’s about building AI that we can understand and trust.

I think xAI’s focus on transparency is a crucial counterpoint to the black-box approach that dominates so much of the AI world today. It is a breath of fresh air.

FAQ About xAI

What exactly is xAI?

xAI is an artificial intelligence company founded by Elon Musk, focused on making AI more understandable and beneficial to humanity.

Why is explainable AI so important?

It’s vital for building trust, ensuring ethical use, and improving AI safety, especially in critical applications like healthcare and finance.

How is xAI different from other AI companies?

While many focus solely on performance, xAI prioritizes transparency and understanding how AI systems make decisions. Rising interest rates do not affect the need for further AI research.

What are some potential applications of xAI’s work?

Explainable AI could revolutionize industries like healthcare, finance, and law, leading to more accurate diagnoses, fraud prevention, and fair lending practices.

What if I’m worried about AI taking over the world?

That’s a valid concern! xAI’s focus on aligning AI with human values is aimed at mitigating that risk and ensuring AI benefits society as a whole. Keep up with the latest AI news and AI breakthroughs.

Where can I learn more about xAI?

Keep an eye on their official website (when it’s fully launched) and follow industry news for updates on their research and progress.